🌊 AI Voice Agents

The Rise of Conversational Intelligence

👋 Hey, I’m Ivan. I write a newsletter about startups and investing. I share market maps, playbooks and tactical resources for founders surfing tech waves.

2025 is going to go down as the year of Agents, but also Voice AI.

We’ve been digging into voice and have made a few investments in the space (HappyRobot, Rauda, Konvo, and Altan, which just partnered with ElevenLabs). There’s a lot of emerging tech that makes this wave worth surfing.

So let’s break down why now and how big this could be:

1. Voice is the most natural interface, and it’s (finally) working

The most human interface has been the most painful one to use. Until now.

Humans love to talk. It’s how we build trust, handle urgency, feel heard.

But voice tech has always been broken: clunky tech, dropped calls, bad bots, endless hold music, and a few other horror stories we’re all familiar with.

AI changed that. For the first time, machines can listen, think, and talk back. All in real-time. Simple to the user. Wildly complex under the hood.

But it unlocks real stuff:

SMBs miss over 60% of inbound calls → voice agents can pick up 24/7

Customers don’t want to wait → AI doesn’t sleep

Agents can now schedule, qualify, renew, etc.

And this goes beyond call centers.

For enterprises: voice agents directly replace human labor, often 10x cheaper, faster, and more consistent.

For consumers: voice feels native. It’s faster than typing, more intuitive than apps, and perfect for things like coaching, language learning, or even companionship.

For builders: voice is now a wedge. Infra is maturing. What matters next is the application layer: the workflows, verticals, and GTM that ride on top.

Bottom line: voice isn’t just working, it’s working better.

And it’s likely about to change how we work, buy, and communicate.

2. We got here after 5 tech waves:

“Please press 1 for rage”

Wave 1 — IVR Hell (1970s–2000s): Rigid phone menus (“Press 1 for billing”) defined early voice tech. Still a $5B+ market, despite being universally hated.

Wave 2 — STT Gets Usable (2010s–2021): Speech-to-text finally worked well enough for business. Gong turned sales calls into structured data. Google’s STT APIs brought real use cases online.

Wave 3 — The Whisper Moment (2022): OpenAI open-sourced Whisper, pushing transcription toward human-level accuracy. Suddenly indie devs could build high-quality STT into apps, free.

Wave 4 — Voice 1.0 (2023–early 2024): Cascading stacks emerged:

Voice → Text → LLM → Text → Voice. ChatGPT + ElevenLabs made agents sound decent, but latency sucked. Brittle UX, long gaps, awkward timing.

Wave 5 — Speech-Native (2024–2025): Speech-to-Speech flips the stack. GPT-4o handles voice input/output natively. 300ms latency, emotion, interruptions. Moshi runs locally, full-duplex. Hume adapts tone in real time.
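To make the Wave 4 latency problem concrete, here is a minimal Python sketch of one cascaded turn (Voice → Text → LLM → Text → Voice). The `Stage` class, the stand-in lambdas, and the per-stage latencies are all hypothetical placeholders, not measurements of any vendor. The only point is that in a cascade the delays add up.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Stage:
    name: str
    latency_ms: int              # assumed, illustrative figure
    run: Callable[[str], str]    # stand-in for a real model call


def run_cascade(stages: List[Stage], user_input: str) -> Tuple[str, int]:
    """Pass the input through each stage in order and sum the latencies."""
    payload, total_ms = user_input, 0
    for stage in stages:
        payload = stage.run(payload)
        total_ms += stage.latency_ms
    return payload, total_ms


# Hypothetical stages: each lambda fakes what a real STT/LLM/TTS call would do.
CASCADE = [
    Stage("stt", 200, lambda audio: audio.replace("audio:", "")),
    Stage("llm", 500, lambda text: f"reply to '{text}'"),
    Stage("tts", 200, lambda text: f"audio:{text}"),
]

reply, latency = run_cascade(CASCADE, "audio:book a table")
print(reply, latency)
```

With these made-up numbers the turn takes 900ms end to end, which is the kind of gap a speech-native model tries to remove by collapsing the three stages into one.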

3. A new Voice Stack makes applications viable

Better infra unlocked faster iteration.

Voice agents used to be a full-stack nightmare.

You had to wrangle real-time audio, transcription, latency, barge-ins, TTS quality, and orchestration logic, just to ship a mediocre demo.

But in the last 18 months, a new modular stack emerged. Infra finally caught up.

Now, each layer has best-in-class players:

Models → GPT‑4o (speech-native reasoning), ElevenLabs (TTS), Deepgram (fast STT), Moshi (open-source S2S)

Infra → Vapi, Retell, Hume, LiveKit handle orchestration, emotion, memory, interruptions.

Apps → Examples like Rauda (CS), Konvo (CX) and a big wave we’ll discuss next.

4. CS, Sales & Recruiting are leading the charge

These use cases are predictable, repetitive, and already voice-native.

You don’t need to convince anyone to “try voice” as it’s already how they operate:

Customer Support → Automate FAQs, renewals, triage, support tickets etc.

Sales → Lead enrichment and lead gen, follow-ups, co-pilots etc.

Recruiting → Building pipeline, running interviews

With a stable infra and ripe jobs to be done, founders have started to go vertical:

Healthcare → Follow-ups, scheduling, insurance calls (e.g. HelloPatient)

Financial Services → Loan servicing, collections (e.g. Salient, Kastle)

SMBs → Booking, lead capture, customer follow-up (e.g. Goodcall, Numa)

5. YC’s latest batches are packed with Voice AI

Healthcare, Sales, HR, Retail Ops, and Productivity.

The Spring 2025 batch was filled with application-layer voice agent companies:

Kavana – AI sales rep for distributors.

Trapeze – AI-native Zocdoc, likely includes voice booking.

Novoflow – AI receptionist for clinics.

Lyra – Voice-aware Zoom for sales.

Nomi – Copilot that listens to sales calls.

Willow – Voice interface replacing your keyboard.

Atlog – Voice agents for retail stores.

SynthioLabs – Voice AI medical rep.

VoiceOS – Automated voice interviews for hiring.

6. The Market Map (2025) is growing fast

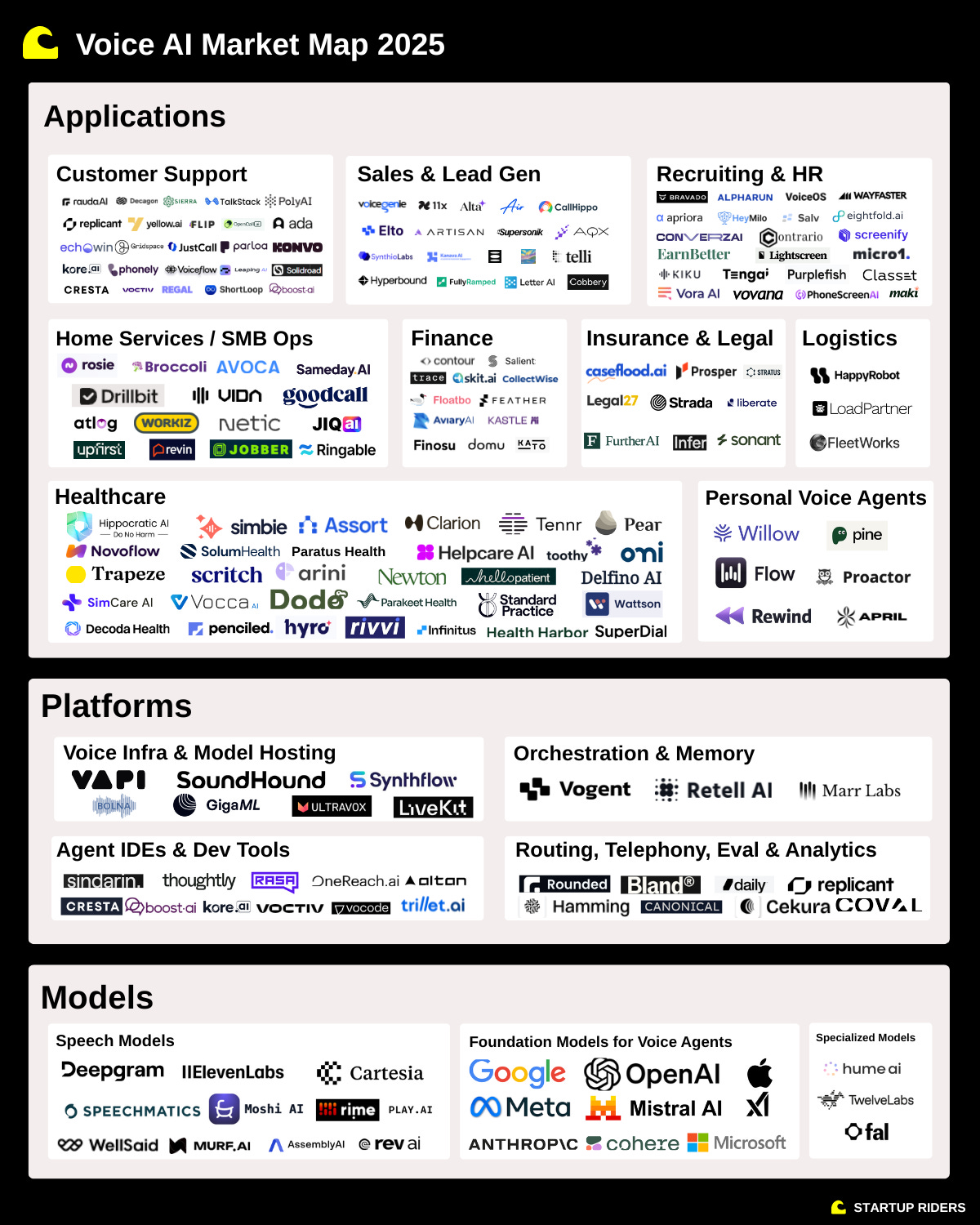

This is where the stack comes together. We mapped out 100+ companies across the Voice AI landscape — models, infra, and apps.

Here’s how to decode it (and what founders need to know):

So, what does each of these fancy boxes actually mean?

Applications → Where Value Happens

This is what users see. End-to-end agents that don’t just talk, they do the full job (hopefully). Support calls. Sales outreach. Healthcare follow-ups. Recruiting screens.

Clear ROI, vertical focus, built on everything below.

Platforms → The Engine Room

Even smart agents break without strong infra. This layer makes them usable in the real world:

Voice Infra: Handles real-time audio so that it is fast, stable, low-lag.

Orchestration & Memory: Manages the flow of the convo, remembers context, avoids awkward pauses and so on.

Dev Tools: Where builders design, test, and improve agents.

Eval & Routing: Tracks performance, handles handoffs, plugs into phones.

Together, platforms turn models into working products.

Models → The Brains

Every voice agent needs three core abilities: 1. Understand speech 2. Think through a response and 3. Talk back naturally. Each type of model handles a piece of this:

Speech Models: These turn voice into text (ASR) and text into voice (TTS). They’re the ears and mouth and help agents hear and speak clearly.

Foundation Models: These are the reasoning core. LLMs that take input (usually text) and generate answers. Most voice agents today still rely on text-based LLMs like GPT. But speech-native ones are coming, skipping transcription altogether.

Specialized Models: These models add extra skills: Emotion detection, video parsing, memory compression, etc. They’re like plug-ins that expand what an agent can sense or do.

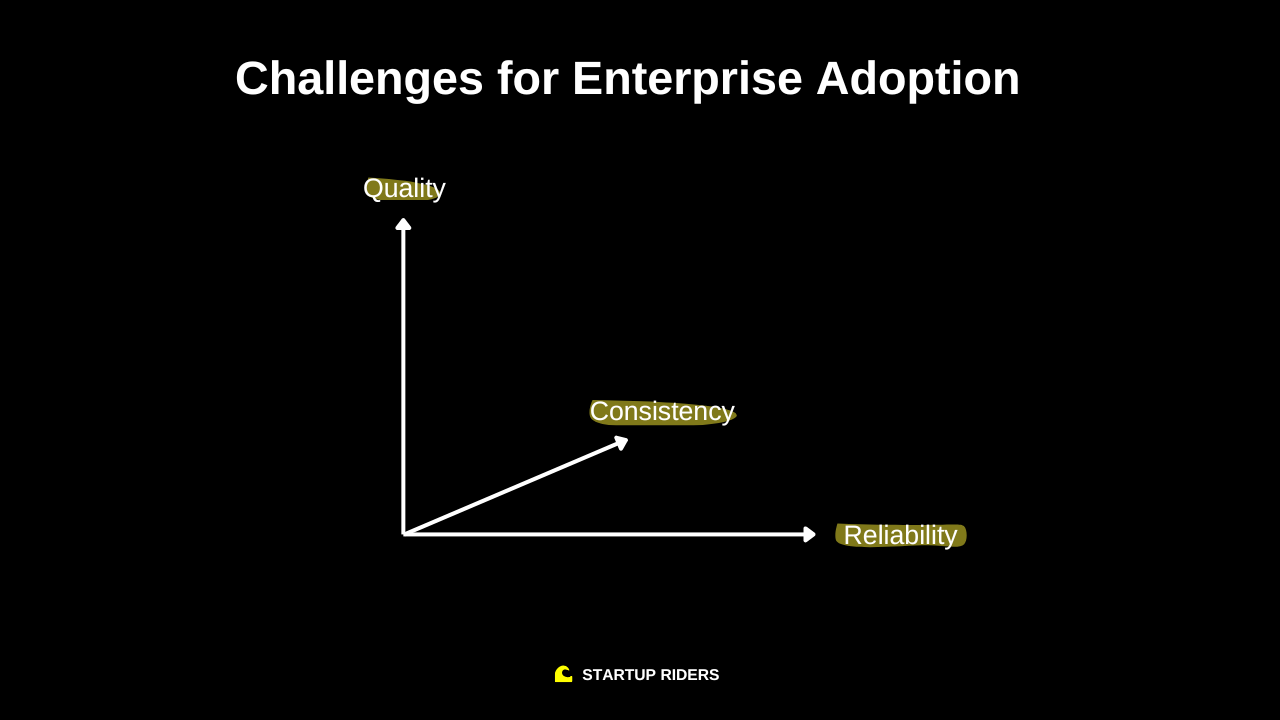

7. There are still challenges (and opportunities) for Enterprise + Consumer adoption

Infra turns voice agents from fragile hacks into trusted products. You can’t fake reliability. And in consumer settings, privacy is part of the infrastructure.

The leap from cool demo to real product comes down to one thing: trust.

And trust isn’t abstract, it’s built on three pillars:

Quality → it sounds human, not robotic

Consistency → it doesn’t stall, freeze, or lose the thread

Reliability → the call doesn’t drop

Most voice agents still fail on one of these. They time out. Hallucinate. Or freeze mid-call. That’s why infra is the real bottleneck and where most hard problems live:

Latency matters most. Sub-300ms roundtrip is the bar for feeling natural. Today’s best stacks still hover around 500ms.

Fail-safes are essential. Enterprise use cases require fallback logic: retries, handoffs, memory resets, and graceful exits.
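The fallback logic above (retries, then a handoff and a graceful exit) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `handle_turn`, `make_flaky_agent`, and the handoff message are hypothetical names invented for the example, and a real deployment would route the handoff through its telephony provider.

```python
from typing import Callable


def handle_turn(
    respond: Callable[[str], str],
    utterance: str,
    max_retries: int = 2,
) -> str:
    """Try the agent up to max_retries + 1 times, then hand off to a human."""
    for _ in range(max_retries + 1):
        try:
            return respond(utterance)
        except TimeoutError:
            continue  # retry the same turn
    # Graceful exit: every attempt timed out, so escalate instead of going silent.
    return "HANDOFF: transferring you to a human agent."


def make_flaky_agent(failures: int) -> Callable[[str], str]:
    """Hypothetical agent that times out `failures` times before answering."""
    state = {"remaining": failures}

    def respond(utterance: str) -> str:
        if state["remaining"] > 0:
            state["remaining"] -= 1
            raise TimeoutError("model timed out")
        return f"Sure, I can help with: {utterance}"

    return respond


print(handle_turn(make_flaky_agent(1), "reschedule my appointment"))
print(handle_turn(make_flaky_agent(5), "reschedule my appointment"))
```

The first call recovers after one retry; the second exhausts its retries and escalates, so the caller never hits dead air.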

For consumers, privacy adds another layer. You’re not just earning trust but earning access. That’s why we’re seeing:

Offline-first agents (Moshi) → no cloud, no compromise

Emotion-aware models (Hume) → tone that adapts to yours

Multimodal input → richer UX, less brittle conversations

And voice-native models (S2S) offer a step change. They handle input, reasoning, and output in one shot. The core problems still ahead:

Interruptions → letting users talk over the agent without cutting them off

Overlap → handling speech when both sides talk at once

Self-awareness → recognizing the agent’s own voice
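One way to picture these three problems is as a tiny turn-taking state machine. The sketch below is a simplification under loud assumptions: the event labels (`user_speech`, `agent_echo`, `reply_ready`, `reply_done`) are hypothetical outputs of some upstream detector, and real speech-native models handle this inside the model rather than with explicit rules.

```python
from enum import Enum
from typing import List


class State(Enum):
    LISTENING = "listening"
    SPEAKING = "speaking"


def next_state(state: State, event: str) -> State:
    """Return the agent's next state for one audio event.

    Events (hypothetical labels an upstream detector would emit):
      "user_speech"  - the caller started talking
      "agent_echo"   - the microphone picked up the agent's own voice
      "reply_ready"  - the agent has a response to play
      "reply_done"   - the agent finished playing its response
    """
    if event == "agent_echo":
        return state                      # self-awareness: ignore own voice
    if event == "user_speech":
        return State.LISTENING            # interruption/overlap: yield the floor
    if event == "reply_ready" and state is State.LISTENING:
        return State.SPEAKING
    if event == "reply_done":
        return State.LISTENING
    return state


def replay(events: List[str]) -> List[str]:
    """Run a list of events from the LISTENING state and record each state."""
    state = State.LISTENING
    trace = []
    for event in events:
        state = next_state(state, event)
        trace.append(state.value)
    return trace


print(replay(["reply_ready", "agent_echo", "user_speech", "reply_ready", "reply_done"]))
```

In this trace the agent keeps speaking through its own echo, stops as soon as the user talks, and only resumes once it has a new reply.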

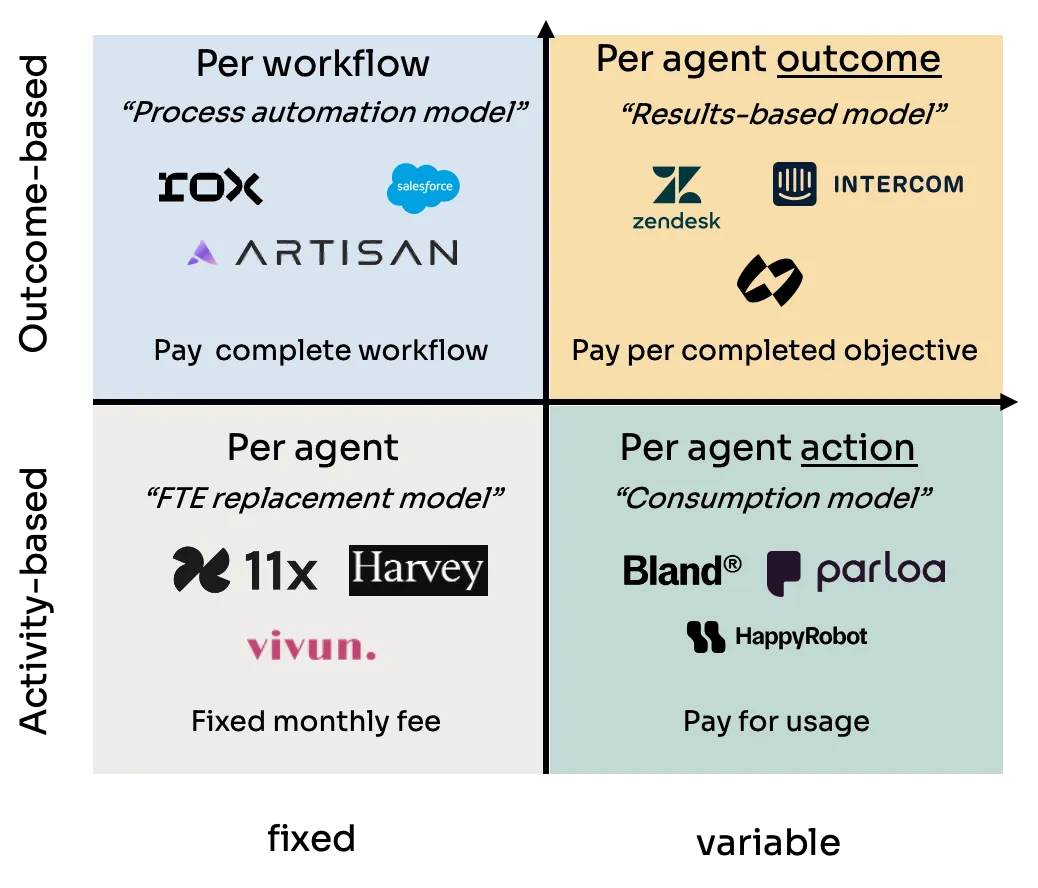

8. Voice is also redefining pricing

From price per minute → fees + usage-based pricing or outcome-based pricing?

Traditional SaaS priced by seat or usage minutes. Voice AI is different: pricing tends to be per action ("book an appointment," "collect payment"), not per user or per hour. Why?

Infra costs scale with usage: ASR + LLM + TTS per call

Customers care about outcomes, not time spent

Investors want scalable unit economics tied to delivered value

Startups are packaging this into new pricing structures:

Outcome-based pricing (e.g. successful scheduling or payment collection)

Tiered usage pricing based on call volume

Performance-based pricing tied to customer KPIs (like reduced missed calls)
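A quick back-of-the-envelope sketch shows why outcome-based pricing forces founders to watch both infra cost and success rate. Every number below is made up for illustration, and `CallCosts` and `outcome_margin` are hypothetical helpers, not anyone's actual pricing model.

```python
from dataclasses import dataclass


@dataclass
class CallCosts:
    """Per-minute infra costs in USD. All figures are made up for illustration."""
    asr_per_min: float
    llm_per_min: float
    tts_per_min: float

    def cost_per_call(self, minutes: float) -> float:
        """Infra cost of one call: ASR + LLM + TTS, scaled by call length."""
        return (self.asr_per_min + self.llm_per_min + self.tts_per_min) * minutes


def outcome_margin(
    costs: CallCosts,
    calls: int,
    avg_minutes: float,
    success_rate: float,
    price_per_outcome: float,
) -> float:
    """Gross margin when the customer pays only for successful outcomes."""
    revenue = calls * success_rate * price_per_outcome
    infra = calls * costs.cost_per_call(avg_minutes)
    return revenue - infra


costs = CallCosts(asr_per_min=0.01, llm_per_min=0.03, tts_per_min=0.02)
margin = outcome_margin(
    costs, calls=1000, avg_minutes=3.0, success_rate=0.4, price_per_outcome=1.0
)
print(f"cost per call: ${costs.cost_per_call(3.0):.2f}")
print(f"monthly gross margin: ${margin:.2f}")
```

The vendor pays for every call but gets paid only for the successful ones, so a drop in success rate or a longer average call eats margin directly.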

These structures sit on top of different pricing models, as Kyle Poyar outlines in a great 2x2 framework:

9. Voice is still an underrated market

Voice is a wedge but can also be seen as a platform shift.

Every shift looks obvious in hindsight. Here’s what’s being missed:

1. Voice unlocks latent operational demand: Every missed call is uncaptured intent. Voice agents don’t just “answer”, they turn hidden demand into structured inputs for ops, sales, logistics, and more.

2. It’s labor arbitrage embedded in a better interface: Most tasks voice agents do aren’t “intelligent”, they’re repetitive, patterned, and emotionally neutral. That’s exactly why they’re automatable.

3. Voice creates a new interface layer on top of legacy systems: Old workflows assumed form inputs. Voice bypasses that, using language as the interface.

4. Every industry will have its ‘voice-native’ format: Just like mobile design reshaped product behavior (swipe, tap, geo), voice-native will reshape turn-taking, interrupt handling, memory/recall and emotional calibration.

5. Model selection becomes strategy: Startups will be forced to pick:

Closed vs open models

Local vs cloud inference

Multilingual, multimodal vs ultra-specialized

We’re actively investing in Voice AI. If you’re building, I’d love to hear from you - please DM or reply to this email.

10. Follow the White Rabbit 🐇🕳️

🌊 Bonus: My Substack Tech MBA

Thanks for reading!

If you enjoy Startup Riders, I’d really appreciate a share - see you next month! 🤙