🌊 Spanish Generative AI

Hello! I’m Ivan, from jme.vc. Join >5.3K entrepreneurs learning the best of what other founders have already figured out in under 3 minutes, 1-2x / month.

Summary

This week’s good stuff for your startup brain includes:

🌊 Generative AI - in layman’s terms.

📈 Thinking - when does remote work make sense for startups?

💰 Deals - everything you need to know about Spanish dealmaking.

🌊 Spanish Generative AI

Although Generative AI is likely (maybe?) early and overhyped, it is important to understand its origins and map out a few simple frameworks to follow its evolution.

I remember working at Bloomreach, a B2B SaaS startup that leveraged Natural Language Processing, back in 2014, when a lot of this (similar?) early hype was already present. We talked about “Human + The Machine” with early customers.

That was 9 years ago. The field has evolved, and keeps evolving, very rapidly; yet when it comes to generative AI, despite its impressive rate of development, we may still be early. But who knows.

If we throw the crystal ball out of the window (as we should) and look at reality on the ground, we see early examples of real traction in both the application layer (some companies with >$100M in revenue) and the infrastructure layer (the Big 5 making moves).

Hold on because this one is a bit longer than usual:

😟 Problem

“The world has been creatively constipated…

🤩 Solution

and we’re gonna let it poop rainbows”

— Emad Mostaque @ Stability AI

⏳ How we got here

I’m gonna go real fast here - there’s a lot that’s happened in a relatively short amount of time, so I’m abstracting a lot away (for more, follow the white rabbit below):

There are 2 major developments (models) in the field of AI you need to know about to understand what all this is about: Generative Adversarial Networks (GANs, 2014) and Transformer models (2017).

Generative Adversarial Networks

The field of Generative AI owes a lot to a 29-year-old back in 2014.

Ian Goodfellow, a PhD student at the University of Montreal, was having a few pints with his friends debating the likelihood of giving birth to a creative AI.

He left the bar to prove them wrong and wrote a now-famous paper, “Generative Adversarial Nets”. Ian later worked at Google Brain and is now at DeepMind, and the rest is history (in the making).

Here’s how I would summarise GANs to my grandma:

There’s been a lot of progress in building machines that can think and create like humans. The latest development is Generative AI, and one of the most important tools (models) enabling it is the GAN - think of it as a genie in your pocket that can create things for you.

Humans are now teaching machines, through unsupervised or semi-supervised learning, to process huge amounts of data and create original outputs.

This creative machine normally uses GANs (Generative Adversarial Networks) or transformers to achieve its goals.

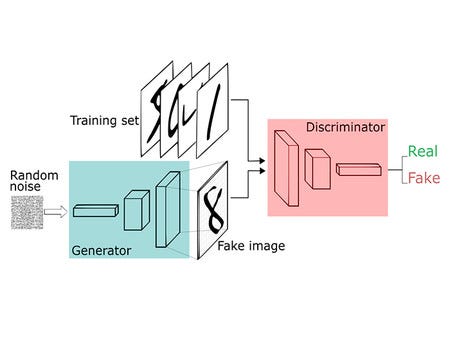

GANs are particularly good at generating new data that resembles a given training set. For example, they can generate new images that look like photographs, or synthesize speech that sounds like a particular person (thanks ChatGPT).

Think of a GAN as two opposing brains (neural networks) living inside the same machine, each trying to outcompete the other in a debate where only one can win: essentially a zero-sum game. The output of this debate is an original creation.
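For the curious, the original GAN paper (Goodfellow et al., 2014) writes this zero-sum game as a single value function that the discriminator D tries to maximise and the generator G tries to minimise. Here D(x) is the discriminator's estimated probability that x is real, and z is the random noise the generator turns into a sample:

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
```

One side's gain is exactly the other side's loss, which is what makes it a zero-sum debate.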

Let’s call the two sides of this debate the Generator and the Discriminator:

Generator: this neural network debater creates outputs on request, starting from random noise. It never sees the real data directly, so in order to learn and get better it needs help from its friend, the discriminator.

Discriminator: this neural network debater is trained to distinguish real-world data from the generator’s ‘fake’ data - think of the discriminator as the defence. Because the generator is rewarded every time it fools the discriminator, and the discriminator every time it catches a fake, both improve without humans having to label the outputs.
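A minimal sketch of how each side keeps score in this debate, assuming a discriminator that outputs a probability between 0 and 1 that its input is real. The function names are illustrative; the losses are the binary cross-entropy form used for GANs, with the common "non-saturating" generator loss (the generator maximises log D(G(z)) instead of minimising log(1 - D(G(z)))). The probabilities are hand-picked, not outputs of a trained model.

```python
import math

def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Low when the discriminator scores real data near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake: float) -> float:
    """Non-saturating loss: low when the discriminator is fooled (d_fake near 1)."""
    return -math.log(d_fake)

# A sharp discriminator (real scored 0.9, fake scored 0.1) wins this round...
print(discriminator_loss(0.9, 0.1), generator_loss(0.1))
# ...while a fooled discriminator (fake scored 0.9) hands the round to the generator.
print(discriminator_loss(0.9, 0.9), generator_loss(0.9))
```

In real training, the two networks take turns: one gradient step lowers the discriminator's loss, the next lowers the generator's, and the tug-of-war repeats.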

Transformer Models

“Transformers made self-supervised learning possible, and AI jumped to warp speed”

— Jensen Huang, CEO Nvidia

In 2017, researchers at Google developed a new type of architecture called the Transformer, in a now-famous paper titled “Attention Is All You Need”.

For your grandma - again, think of this invention as having a genie in your pocket that is able to create things for you, but this time it’s better suited to certain types of jobs. Transformer models are good at tasks such as language translation, text generation, and text classification. They excel at understanding and processing sequential data, making them well suited to natural language processing tasks (thanks ChatGPT).

By “paying attention”, a Transformer model helps computers better understand sequential data, such as text or speech, by learning the relationships and dependencies between the elements in the data.
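To make “paying attention” concrete, here is a minimal pure-Python sketch of scaled dot-product attention, the core operation from “Attention Is All You Need”: softmax(QKᵀ/√d_k)·V. Each position scores every other position, turns the scores into weights with a softmax, and takes a weighted average of the values. Real models learn the query/key/value projections and run many attention heads; here the vectors are toy numbers chosen by hand.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How similar is this query to every key? Scale by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy "tokens"; using the same vectors as queries, keys and values = self-attention
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 2) for w in row])
```

The loop over queries is only there for readability: no query depends on any other, which is why real implementations compute all of them at once as a single matrix multiplication.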

Relative to their older sibling, recurrent neural networks (RNNs, which still have their own strengths for different purposes), Transformers process all the elements in a sequence in parallel rather than one step at a time, which makes training much faster. This in turn lets you train them on far larger amounts of data and produce much more accurate outputs, which explains some of the current “hype”.
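For contrast, a minimal sketch of a vanilla recurrent step, h_t = tanh(w_x·x_t + w_h·h_(t-1)), using a scalar input and a scalar hidden state to keep it readable. The weights are arbitrary illustrative values, not trained ones. Each step needs the previous hidden state, so the loop cannot be spread across time steps in parallel.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.9):
    """Vanilla RNN with a scalar hidden state; returns the hidden state after each step."""
    h = 0.0
    states = []
    for x_t in inputs:
        # The new state depends on the previous one: this is the sequential bottleneck
        h = math.tanh(w_x * x_t + w_h * h)
        states.append(h)
    return states

print(rnn_forward([1.0, 0.0, 0.0, 0.0]))
```

Even though every input after the first is zero, the first input keeps echoing through later states. That memory is what RNNs are good at, and the step-by-step loop is what makes them slow to train on long sequences.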

The key takeaways if you were to compare both GANs and Transformers:

GANs: better at generating new data.

Transformers: better at processing and understanding sequential data.

Different, but combinable: the two differ in architecture, learning process and use cases, but they can also be combined.

As a result of both innovations, the field is moving fantastically fast.

Frameworks

Now, a few frameworks from smart people to help you map out Generative AI:

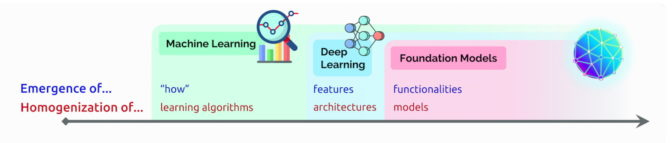

1. Technology Waves (Sequoia)

Wave 1: Small models reign supreme (Pre-2015): An era where small models excel at analytical tasks and are deployed for jobs from delivery time prediction to fraud classification.

Wave 2: The race to scale (2015-Today): You know this one! Google Research publishes Attention is All You Need. Transformer models are few-shot learners and can be customized to specific domains relatively easily.

Wave 3: Better, faster, cheaper (2022+): Compute gets cheaper. New techniques, like diffusion models, shrink down the costs required to train and run inference. The research community continues to develop better algorithms and larger models. Developer access expands from closed beta to open beta, or in some cases, open source.
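Diffusion models learn to reverse a simple corruption process: noise is added to the data step by step, and a network is trained to undo it. A minimal pure-Python sketch of the forward (noising) side only, in the DDPM formulation x_t = √ᾱ_t·x_0 + √(1 - ᾱ_t)·ε, where ᾱ_t is how much of the original signal survives after t steps. The linear β schedule (1e-4 to 0.02 over 1,000 steps) is the one used in the DDPM paper (Ho et al., 2020); the four-number "image" is a toy assumption.

```python
import math
import random

def alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal-retention factors for a linear beta (noise) schedule."""
    out, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        out.append(running)
    return out

def noisy_sample(x0, t, a_bars, rng):
    """Jump straight to step t: scale the clean data down and mix in Gaussian noise."""
    a = a_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]

rng = random.Random(0)
a_bars = alpha_bars()
x0 = [1.0, -1.0, 0.5, 0.0]  # toy "data", e.g. four pixel values
print(noisy_sample(x0, 10, a_bars, rng))   # still close to x0
print(noisy_sample(x0, 999, a_bars, rng))  # essentially pure noise
```

Generation runs this in reverse: a trained network starts from pure noise and removes a little of it at each step. That learned denoiser is the part this sketch leaves out.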

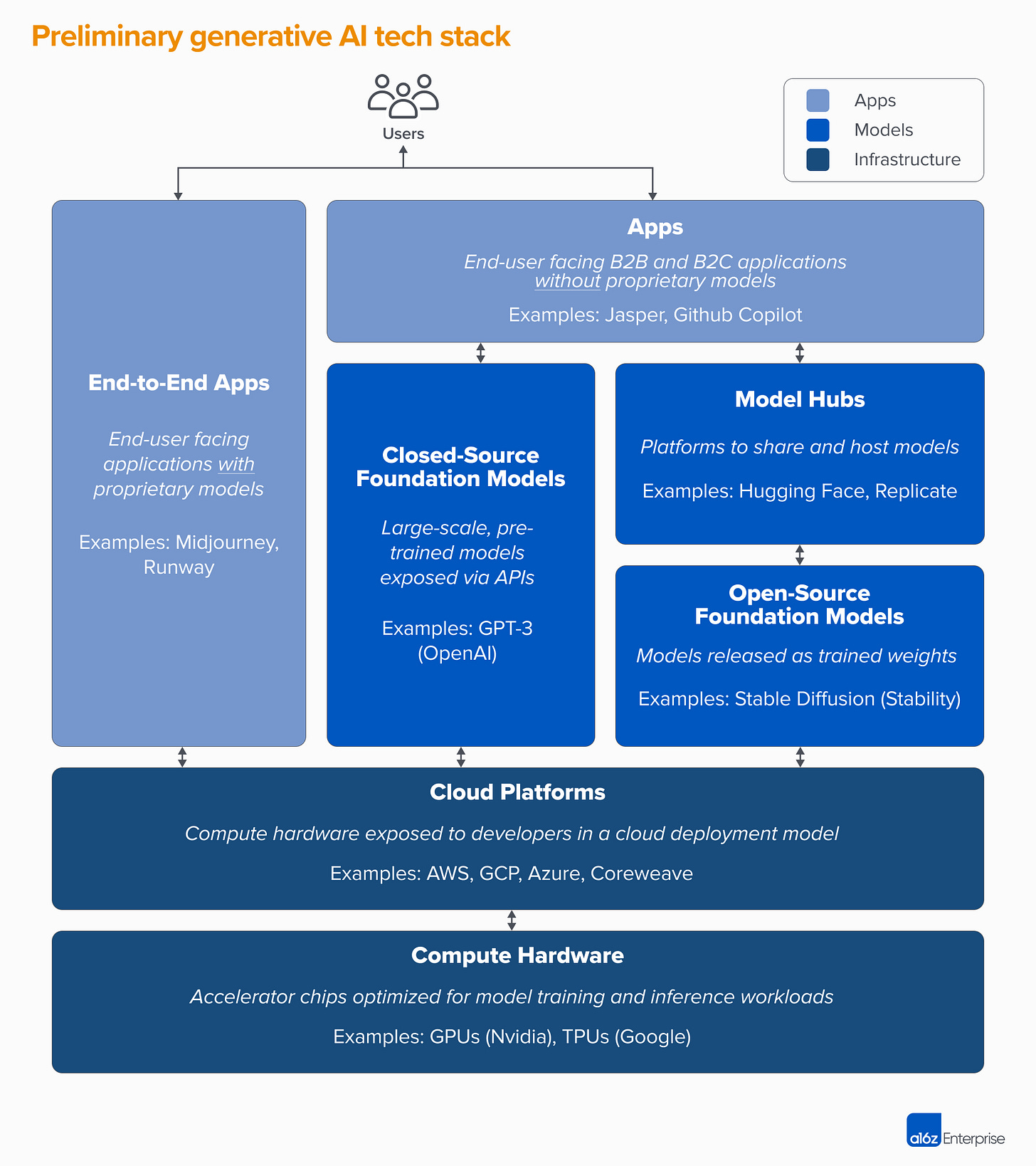

2. The Tech-Stack (a16z)

There are 3 layers to this cake:

Applications that integrate generative AI models into a user-facing product, either running their own model pipelines or relying on a third-party API.

Models that power AI products, made available either as proprietary APIs or as open-source checkpoints.

Infrastructure vendors (i.e. cloud platforms and hardware manufacturers) that run training and inference workloads for generative AI models.
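A minimal sketch of how the three layers can meet in code, assuming an application that depends only on a narrow model interface so it can either run its own pipeline or call a third-party API. All class and method names here are hypothetical, and both "models" are stubs standing in for real checkpoints or vendor SDKs.

```python
from typing import Protocol

class TextModel(Protocol):
    """Model layer: anything that turns a prompt into text."""
    def generate(self, prompt: str) -> str: ...

class LocalModel:
    """Hypothetical self-hosted model pipeline (a stub that echoes the prompt)."""
    def generate(self, prompt: str) -> str:
        # A real pipeline would run an open-source checkpoint on GPUs
        # rented from (or bought from) the infrastructure layer.
        return f"[local] {prompt[:40]}"

class ThirdPartyAPIModel:
    """Hypothetical wrapper around a proprietary model API (no network call here)."""
    def __init__(self, api_key: str) -> None:
        self.api_key = api_key

    def generate(self, prompt: str) -> str:
        # A real client would send the prompt to the vendor's endpoint.
        return f"[api] {prompt[:40]}"

class CopywritingApp:
    """Application layer: user-facing product logic, agnostic to where the model runs."""
    def __init__(self, model: TextModel) -> None:
        self.model = model

    def tagline(self, product: str) -> str:
        return self.model.generate(f"Write a tagline for {product}")

print(CopywritingApp(LocalModel()).tagline("a glamping site"))
print(CopywritingApp(ThirdPartyAPIModel("hypothetical-key")).tagline("a glamping site"))
```

The design choice worth noting is the narrow interface: the application can switch between running its own model and relying on an API without touching product code.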

3. Use Cases: Creativity, Productivity, [Insert Epic Idea]

Over the past couple of years we have seen predominantly 2 categories showing up in the application layer - with more innovative plays coming out every day:

Creativity: Deep Voodoo, Lensa, Midjourney, RunwayML, Alpaca, Riffusion etc.

Productivity: GitHub Copilot, Synthesia, Jasper.ai, Copy.ai, and Lex.

YOLO: e.g. synthetic biology.

4. Business Models (Digital Native)

We’re seeing a little of everything pop up across the application layer:

SaaS: Runway

Consumer Subscription: Midjourney

Freemium + Micropayments: Lensa

Marketplaces: PromptBase

5. Moat (Stratechery & Sam Altman)

There’s a big question around how to build defensibility into Generative AI businesses.

Over the past couple of years the big winners have been the infrastructure vendors.

Application providers have also seen some wins, with some generating >$100M in annualised revenue in <12 months - but with retention, differentiation and margin issues looming.

Here are a couple of interesting perspectives:

The Original App Store perspective [Stratechery]: It feels a little like the early App Store era (circa 2010) right now for Generative AI, with cool one-off apps, often built by solo developers, making millions of dollars in revenue. The beauty of the “original” iPhone vision was that you didn’t have to worry about moats: you could just build, Apple would take care of distribution + hosting, and that’s that.

The “middle layer” opportunity [Sam Altman]: Sam doesn’t believe in companies training their own language models, but does see a future where a middle layer emerges - companies taking a large language model built by one of the big names, and tuning it for a particular vertical.
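Tuning a general model for a vertical usually starts with a dataset of domain-specific examples. A minimal sketch that writes such examples as JSON Lines (one JSON object per line), a format many fine-tuning tools accept. The `prompt`/`completion` field names and the rental-contract examples are illustrative assumptions, so check your model provider's documentation for the exact schema it expects.

```python
import io
import json

def write_finetune_jsonl(examples, stream):
    """Write (prompt, completion) pairs as one JSON object per line."""
    for prompt, completion in examples:
        record = {"prompt": prompt, "completion": completion}
        # ensure_ascii=False keeps accented Spanish characters readable in the file
        stream.write(json.dumps(record, ensure_ascii=False) + "\n")

# Hypothetical vertical: summarising clauses from Spanish rental contracts
examples = [
    ("Resume la cláusula: El arrendatario abonará la renta el día 1 de cada mes.",
     "Rent is due on the 1st of each month."),
    ("Resume la cláusula: La fianza equivale a dos mensualidades.",
     "The deposit equals two months' rent."),
]
buffer = io.StringIO()  # swap for open("train.jsonl", "w", encoding="utf-8") to write a file
write_finetune_jsonl(examples, buffer)
print(buffer.getvalue())
```

In the "middle layer" picture, the dataset (and the domain knowledge behind it) is where much of the vertical company's value would sit; the base model comes from one of the big names.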

6. The Big 5 (Stratechery)

How are they positioning themselves for what is to come?

Apple: most of its AI work has been proprietary (e.g. photo identification, voice recognition). BUT it has received a blessing from the open source world: Stable Diffusion. Long story short, Stable Diffusion is surprisingly small and is likely to be built into Apple’s operating systems, with APIs accessible to app developers. Having built-in local capabilities will deeply affect this market.

Amazon: the company is itself a chip maker and may build dedicated hardware for models like Stable Diffusion to compete on price; it is also a major cloud provider of GPUs from Nvidia, the leading GPU manufacturer.

Meta: the company is making big capital expenditures to capture the AI opportunity, with massive data centres targeted particularly at personalised recommendations / content delivered through Meta’s channels.

Google: the company invented the Transformer, the key ingredient underlying the latest AI models you hear about, and has long been a leader in using machine learning. Of the Big 5, however, Google’s search business is (possibly?) the most “at risk”, given the disruptive potential of generative AI.

Microsoft: Satya Nadella and co. have a lot going for them. They have a cloud service that sells GPU capacity, they are the sole cloud provider for OpenAI, they may be incorporating ChatGPT-like results into Bing, and ChatGPT may fill the gaps in Microsoft’s productivity apps (think Clippy, but actually good) - and it all fits neatly into Microsoft’s subscription business model.

🚀 Market Map (Sequoia)

👌 Spanish Generative AI Market Map

P.S. Want access to an Airtable with 36 projects, or want to feature your project? Drop a comment below 👇

⭐ My Favorites

Adept - a “Transformer for actions”, i.e. a large-scale Transformer trained to use digital tools; for example, it was recently taught how to use a web browser.

Riffusion - uses Stable Diffusion to generate music from text by generating spectrogram images (visual representations of sound).

Deep Voodoo - deepfake / synthetic media company from the creators of South Park.
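Riffusion's trick works because sound can be drawn as an image: a spectrogram shows how much energy each frequency has over time, so an image model can generate one and it can then be turned back into audio. A minimal pure-Python sketch of the forward direction only (audio to spectrogram), using a naive DFT on short Hann-windowed frames. This is an illustration, not Riffusion's pipeline: real tools use an FFT library and often a mel frequency scale, and going from image back to audio needs an extra phase-reconstruction step not shown here.

```python
import cmath
import math

def spectrogram(signal, frame_size, hop):
    """Magnitude spectrogram: one row per time frame, one column per frequency bin."""
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = signal[start:start + frame_size]
        # Hann window tapers the frame edges to reduce spectral leakage
        windowed = [s * 0.5 * (1 - math.cos(2 * math.pi * n / (frame_size - 1)))
                    for n, s in enumerate(frame)]
        # Naive DFT; keep bins 0..frame_size//2 since real input is symmetric
        row = []
        for k in range(frame_size // 2 + 1):
            acc = sum(x * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n, x in enumerate(windowed))
            row.append(abs(acc))
        frames.append(row)
    return frames

sample_rate = 800  # samples per second (tiny, to keep the naive DFT fast)
tone = [math.sin(2 * math.pi * 100 * n / sample_rate) for n in range(sample_rate)]  # 1 s at 100 Hz
spec = spectrogram(tone, frame_size=64, hop=32)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(len(spec), peak_bin * sample_rate / 64)  # number of frames, peak frequency in Hz
```

Each frequency bin is sample_rate / frame_size = 12.5 Hz wide here, so the 100 Hz tone shows up as a bright horizontal line at bin 8 across every frame: exactly the kind of picture an image model can learn to draw.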

💡Final Thoughts

We might be moving (fast) from GUIs to language models as the main interface.

We’re going to start seeing co-pilots for everything.

As innovation waves go, generative AI stands out for eliciting immediate joy among users, which seems very core to the human condition and sets it apart from other hyped-up trends. Creativity and freedom of expression will do that to you!

I hope this has profound implications for education, and even revolutionises it. I reckon our current system kills creativity and lacks personalisation. Generative AI could, in theory, dramatically reduce the cost of “artificial tutors”. Besides, if our education system doesn’t evolve (fast), we will run into a brick wall - just look at how fast computers are getting good at a lot of what we “test for” in schools, e.g. maths, reading and writing.

So far GenAI is producing a lot of fun and “gimmicks”, BUT some of its applications could have profound societal implications. The model research pipeline looks wild: think biology, drug development and so on.

🐇 Follow the White Rabbit

📈 Thinking

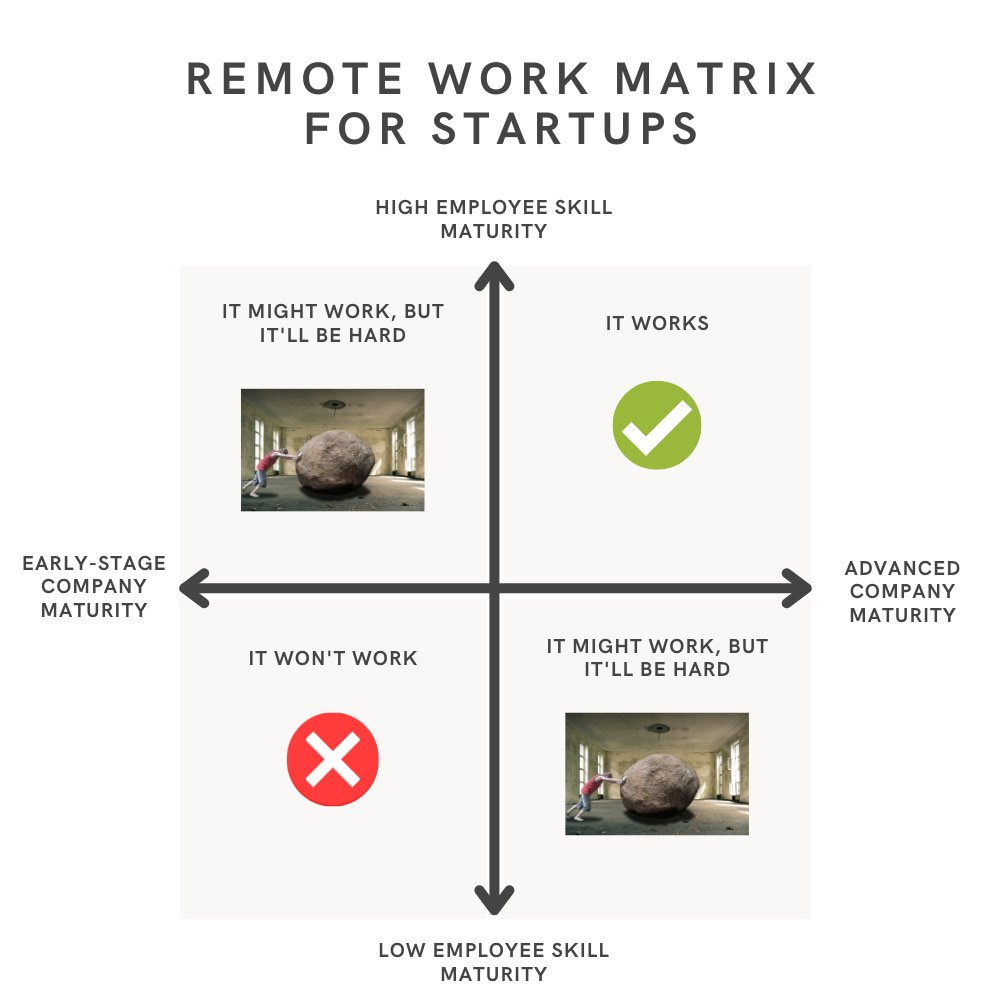

Does remote work make sense for startups? It depends:

💰 Deals

You love startups and want to enjoy a Spanish lifestyle? Come join a Spanish startup.

Here’s a list of recently funded startups:

Fever raised >100M

Wallapop (marketplace) raised 81M

Kampaoh (Glamping) raised 16M

Twinco raised 11M

IEQSY (energy) raised 10M

Tetraneuron (ehealth) raised 7.5M

Kintai (fintech) raised 2.5M

Camillion (productivity) raised 1.8M

OKTicket (fintech) raised 1.8M

Argilla (NLP) raised 1.5M

Dawa (refunds) raised 1.2M

Sporttips (sport) raised 1M
